Analytics Experience

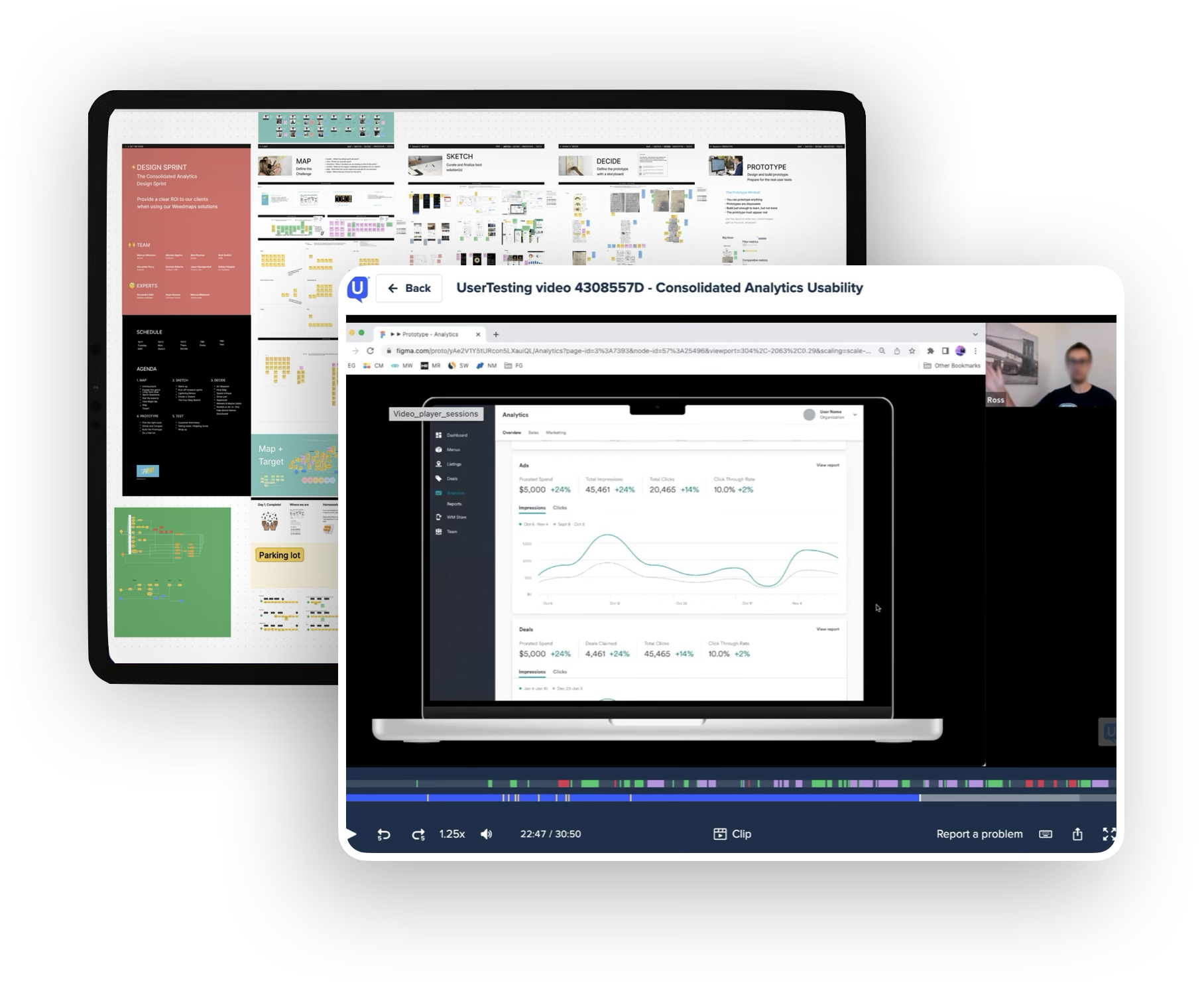

An end-to-end research study generating formative & evaluative insights through in-depth client interviews, contextual inquiry, & iterative user testing.

Project overview

Opportunity

Weedmaps business clients lack satisfactory access to the performance data & analytics they need to run their businesses successfully, and what is available is hard to navigate, with key performance metrics decentralized across various surfaces of the SaaS product suite.

Contribution

As the lead UX Researcher of the B2B team, I designed, conducted, and synthesized qualitative research to inform design strategy and kickstart ideation, then performed rapid iterative user testing to evaluate & optimize the user experience.

Outcome

An effective & enabling self-serve analytics solution tailored to the complex needs of retail business operators, increasing overall client satisfaction by 30% and reducing calls to Client Success teams by 20%.

-

Core team: UX Research, Product Management, Product Marketing Management, UX Design

Stakeholders: Client Success, Engineering, Product Analytics, Product Leadership, Design Leadership, Product Marketing Leadership

-

- Stakeholder interviews to gain insight into the problem and define the opportunity

- User interviews to understand the needs and expectations of clients and inform design strategy

- Rapid iterative testing & evaluation (RITE) to quickly refine the UX & UI for optimal comprehension, effectiveness, and ease of use

-

UserTesting, Figma, FigJam, Google Workspace (Docs, Slides), Atlassian (Confluence, Jira)

Brand introduction

WM Technology (Weedmaps) is a cannabis tech company connecting medical and recreational cannabis consumers to brands, products, retailers, and healthcare providers. With a B2B2C operating model, Weedmaps also offers the cannabis retailer community a robust SaaS product suite that empowers ecommerce and brick & mortar retail managers to scale their business through omnichannel advertising, marketing, operations, and branded ecommerce.

The challenge

Within the Weedmaps for Business product suite, key performance analytics are either decentralized or unavailable on a self-serve basis. The fragmented experience makes seeing the big picture and making informed decisions extremely difficult for clients, and because they lack access to some crucial data, they have taken to calling their Client Success rep frequently for insight and guidance. This has had a substantial impact on the Client Success teams’ time. We needed to steer the product in the direction of user needs, but first we needed to understand those needs on a deeper level.

Research plan

Our research goals were to understand the needs and expectations of WM for Business clients, uncover gaps between their goals and the current experience, and then fill those gaps in the design process. This would require a multi-method approach in three phases to 1) understand the problem space, 2) generate insights to inform design, and 3) test designs for optimization.

1) Stakeholder interviews

-

Understand the current landscape

Identify known knowns, unknown knowns, and known unknowns

Understand the impact this issue is having on internal teams

-

8 interviews dual-led by UXR and UXD

Product Manager & Product Marketing Manager for each WM for Business product

Sample of Client Success Managers

Product Analytics

Conducted via Google Meet

Note-taking in FigJam

-

Session scheduling

Discussion outline write-up

Collaborative note-taking prep

2) In-depth client interviews

-

Understand what clients need to run their businesses successfully & independently

Uncover underlying desires and expectations that are not being serviced today

Determine how client needs differ between account types and job functions

Learn firsthand about the frustrations and friction points clients have with the current experience

Develop a client hierarchy of analytics needs from must-have to nice-to-have

-

In-depth user interviews

6 WM clients, active users of WM product suite

Sample of single-location & multi-location business operators

Sample of various job functions including business owner, operations manager, and marketing manager

Conducted via UserTesting

Note-taking in UserTesting & FigJam

-

Research brief documentation

Sampling & recruitment

Session scheduling

Discussion guide write-up

Session setup & observer invites

Collaborative note-taking prep

Analysis & mapping plan

Reporting, publishing, & distribution protocol

3) Iterative user testing

-

Test designs iteratively for optimal comprehension and usability

Continuously refine designs based on feedback from test participants

Document any limitations or constraints of the experience to reference during post-release evaluation

-

Moderated user tests

8 WM clients, active users of WM product suite

Sample of single-location & multi-location business operators

Sample of various job functions including business owner, operations manager, and marketing manager

Conducted via UserTesting

Note-taking in UserTesting & FigJam

-

Task & question list

Prototype management

Sampling & recruitment

Session scheduling & setup

Documentation & feedback to design

Stakeholder interviews

To clearly define the problem we set out to solve, we needed to immerse ourselves in the landscape and quantify the current impact on teams. In partnership with UX Design, I conducted stakeholder interviews to gain context, untangle the intricacies of the current state, and establish baseline metrics that we would aim to improve. Participants included Product Managers, Product Marketing Managers, Client Success Managers, and Product Analysts.

“It's very 'give a man a fish' right now. I feel like I spend more time answering one-off analytics requests [for clients] than anything else.” - Client Success Manager

Client interviews

With a clear understanding of the problem space and alignment on research objectives, I moved forward with phase 2, conducting six 60-minute client interviews over two days, dedicating the first half of each session to learning about their goals and motivations, and the second half to uncovering their behaviors and pain points through contextual inquiry.

I invited the core team and stakeholders to attend sessions as anonymous observers and encouraged note-taking in a prepared FigJam workspace, using a color-coded notes system to categorize client sentiments as pain points, needs, and opportunities.

After data collection, I led the core team in an affinity diagramming activity, grouping notes into clusters to identify themes. This collaborative exercise allowed us to measure the frequency with which concepts arose, gauge the overall sentiment toward each, and prioritize accordingly.

A sample of key insights

Consolidation of information

Clients unequivocally want to see their most crucial business metrics from across WM products all in one place for easier monitoring.

Grasping metrics & impact

Clients struggle to decipher analytical jargon and seek contextual definitions or a glossary for better comprehension.

Control over data

Clients unanimously value the ability to download and export raw data, and withholding this capability raises concerns about data integrity.

Comparing to baseline

Clients need context by comparison to understand their current business performance vs. previous time periods and/or vs. market trends.

Filtering & segmentation

Clients want to filter their data by variables like date & location (if applicable), and create segments using multiple attributes at once.

Knowing their customer

Clients want more information about customer demographics, shopping behaviors, and overall satisfaction rates.

Design/test sprint

Armed with insights, the core project team embarked on a 5-day design sprint to expedite ideation, wireframing, and testing. By day three, we had a hi-fi prototype and a comprehensive list of key interactions & tasks for testing. I conducted a series of 15 rapid iterative usability tests, evolving the questions and tasks to reflect changes made between tests. Because client access was restricted, participants included 6 WM clients, 5 Client Success Managers, and 4 proxy users (i.e., operators of small/medium retail businesses who are not WM clients but have very similar goals and SaaS product needs).

Outcome & impact

According to an analysis of call logs and satisfaction surveys, the new analytics experience contributed to a 2x increase in positive CSAT ratings and reduced inbound calls to Client Success Managers by more than 50%.

Guided by qualitative research, contextual inquiry, and iterative usability testing, the shipped solution effectively addressed as many identified client needs as possible within technical and business constraints and created a backlog of future enhancements, such as introducing diagnostic, prescriptive, and predictive analytics to the dashboard experience.